Supplementary Figures

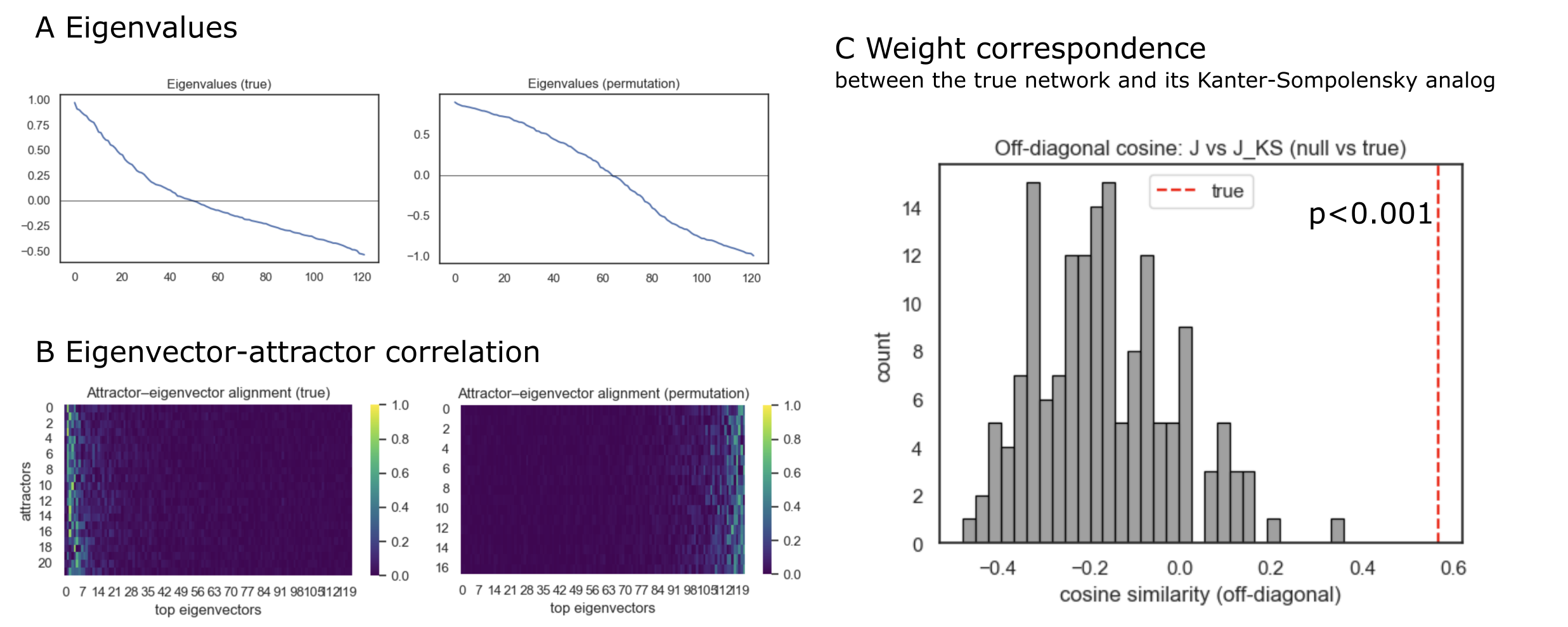

Figure 1: Eigenstructure and projector tests of the fcANN

A: Eigenvalue spectra of the empirical coupling matrix J (left) and of null model 1 (right; a coupling matrix based on phase‑randomized timeseries data, recalculated for each permutation). B: Eigenvector–attractor alignment calculated from the empirical (left) and phase‑randomized data (right). Attractors were obtained by deterministic relaxation from random initial states (sign‑duplicates were collapsed); alignment is the absolute cosine between the collapsed attractor vectors and the top eigenvectors of J. C: Weight correspondence between J and its Kanter–Sompolinsky (K–S) analog. From the measured attractors we formed Σ (columns are attractors) and C=ΣᵀΣ/N, then computed the pseudo‑inverse projector J_KS. Similarity was quantified as the cosine between the off‑diagonal elements of J and J_KS. The gray histogram shows the null distribution from null model 2 (symmetry‑preserving permutations of J; see the Source notebook for similar results with null model 1, i.e. phase‑randomized timeseries data); for each of the 1000 permutations we recomputed the surrogate network's own attractors and J_KS. The red dashed line marks the empirical value; the one‑sided p‑value is the fraction of null cosines ≥ the empirical cosine. The empirical network shows stronger eigenvector–attractor alignment and substantially higher J↔J_KS off‑diagonal correspondence than the null, consistent with approximate K–S projector behavior.
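The projector test in panel C can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the standard pseudo‑inverse form J_KS = Σ C⁺ Σᵀ / N (the caption does not spell the formula out), and the function names `ks_projector` and `offdiag_cosine` are hypothetical.

```python
import numpy as np

def ks_projector(attractors):
    """Pseudo-inverse (K-S style) projector from attractor states.

    attractors: (N, P) array whose columns are attractor states (Sigma).
    Assumed form: J_KS = Sigma @ pinv(C) @ Sigma.T / N, with C = Sigma.T @ Sigma / N.
    """
    n = attractors.shape[0]
    overlap = attractors.T @ attractors / n  # C in the caption
    return attractors @ np.linalg.pinv(overlap) @ attractors.T / n

def offdiag_cosine(a, b):
    """Cosine similarity between the off-diagonal elements of two square matrices."""
    mask = ~np.eye(a.shape[0], dtype=bool)
    x, y = a[mask], b[mask]
    return float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))

# Toy example with random +/-1 "attractors" (illustrative data only).
rng = np.random.default_rng(0)
sigma = rng.choice([-1.0, 1.0], size=(20, 3))
j_ks = ks_projector(sigma)
print(offdiag_cosine(j_ks, j_ks))
```

With linearly independent attractors, the projector built this way maps each attractor onto itself, which is the property the cosine comparison with J probes.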

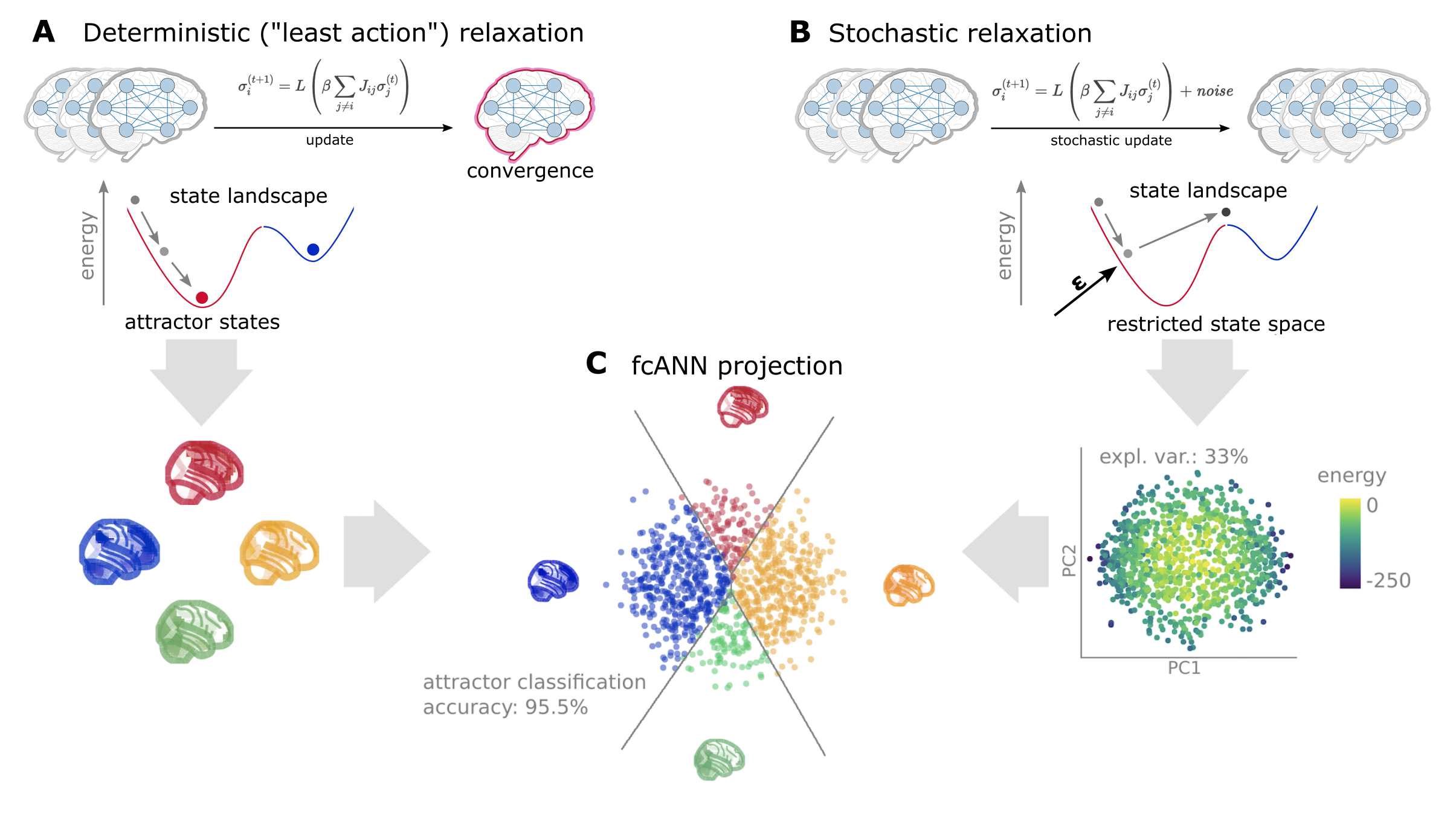

Figure 2: Schematic representation of the fcANN projection. The fcANN projection is a 2-dimensional visualization of the fcANN dynamics, based on the first two principal components (PCs) of the states sampled from the stochastic relaxation procedure. The first two PCs yield a clear separation of the attractor states, with the two symmetric pairs of attractor states located at the extremes of the first and second PC. To map the attractors’ basins onto the space spanned by the first two PCs, we obtained the attractor state of each point visited during the stochastic relaxation and fitted a multinomial logistic regression model to predict the attractor state from the first two PCs. The resulting model accurately predicted attractor states of arbitrary brain activity patterns, achieving a cross-validated accuracy of 96.5% (permutation-based p<0.001). The attractor basins were visualized using the decision boundaries obtained from this model. We propose the 2-dimensional fcANN projection as a simplified visual representation of brain dynamics, and use it as a basis for all subsequent analyses in this work.

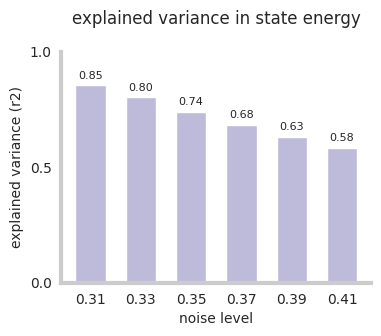

Figure 3: Explained variance in state energy by the first two principal components. See supplemental

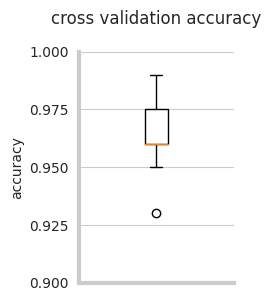

Figure 4: Cross-validation classification accuracy of the fcANN when predicting the attractor state from state activation. See supplemental

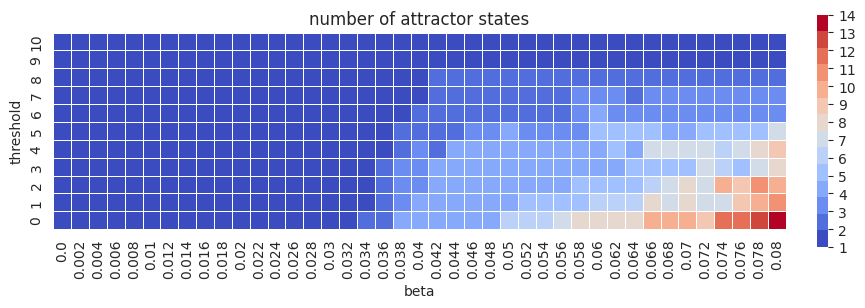

Figure 5: Parameter sweep of the fcANN parameters threshold and beta. The number of attractor states is color‑coded. See supplemental

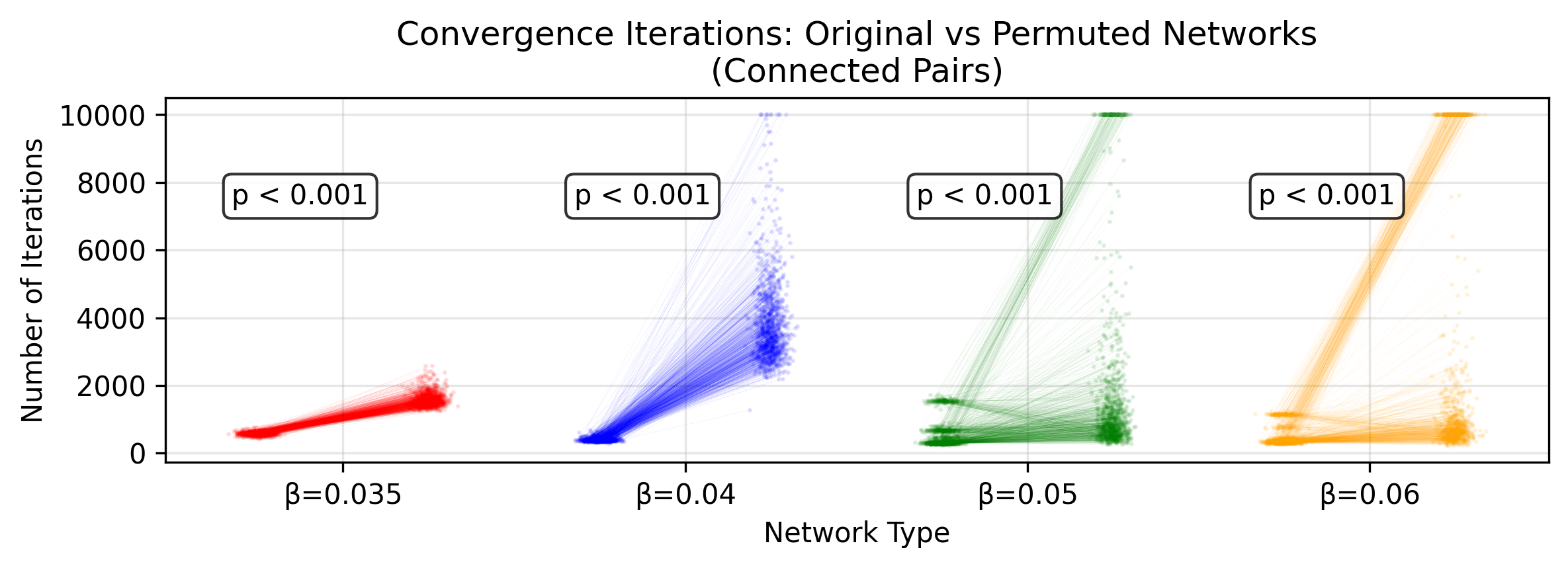

Figure 6: fcANNs initialized with the empirical connectome have better convergence properties than permutation‑based null models. We investigated the convergence properties of functional connectome‑based ANNs in study 1 by contrasting the number of iterations until convergence with that of a permutation‑based null model. Specifically, the null model was constructed by randomly permuting the upper triangle of the original connectome and mirroring it onto the lower triangle to obtain a symmetric network (symmetry of the weight matrix is a general requirement for convergence). This procedure was repeated 1000 times. In each repetition, we initialized both the original and the permuted fcANN with the same random input and counted the number of iterations until convergence. Each point on the plot shows an iteration number; the lines connect iteration numbers corresponding to the original and permuted matrices initialized with the same input. Statistical significance of the faster convergence in the empirical connectome was assessed via a one‑sided Wilcoxon signed‑rank test (i.e., a non‑parametric paired test) on the paired iteration values (1000 pairs), with the alternative hypothesis that the empirical connectome converges in fewer iterations than the permuted connectome. The whole procedure was repeated with and 0.6 (providing 2–8 attractor states). See convergence
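The null‑model construction described in this caption can be sketched as below. This is only an illustration under stated assumptions: the diagonal is set to zero, and the relaxation rule (a synchronous `tanh` update with a hypothetical inverse temperature `beta`) is a stand‑in, not necessarily the update used in the study. The function names are hypothetical.

```python
import numpy as np

def permuted_symmetric_null(J, rng):
    """Shuffle the upper triangle of J and mirror it to the lower triangle.

    Assumption: self-connections (the diagonal) are set to zero.
    """
    n = J.shape[0]
    iu = np.triu_indices(n, k=1)
    null = np.zeros((n, n), dtype=float)
    null[iu] = rng.permutation(J[iu])
    return null + null.T

def iterations_to_converge(J, state, beta=1.0, tol=1e-6, max_iter=1000):
    """Count synchronous updates until the state stops changing.

    The tanh update is an illustrative stand-in for the network's relaxation rule.
    Returns max_iter if the tolerance is never reached.
    """
    for it in range(1, max_iter + 1):
        new_state = np.tanh(beta * (J @ state))
        if np.linalg.norm(new_state - state) < tol:
            return it
        state = new_state
    return max_iter

# Paired comparison on toy data: same random input for both networks.
rng = np.random.default_rng(42)
a = rng.normal(size=(10, 10))
J_emp = (a + a.T) / 2
np.fill_diagonal(J_emp, 0.0)
x0 = rng.normal(size=10)
pair = (iterations_to_converge(J_emp, x0),
        iterations_to_converge(permuted_symmetric_null(J_emp, rng), x0))
print(pair)
```

Collecting such pairs over many permutations gives the paired samples on which a one‑sided signed‑rank test can be run.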

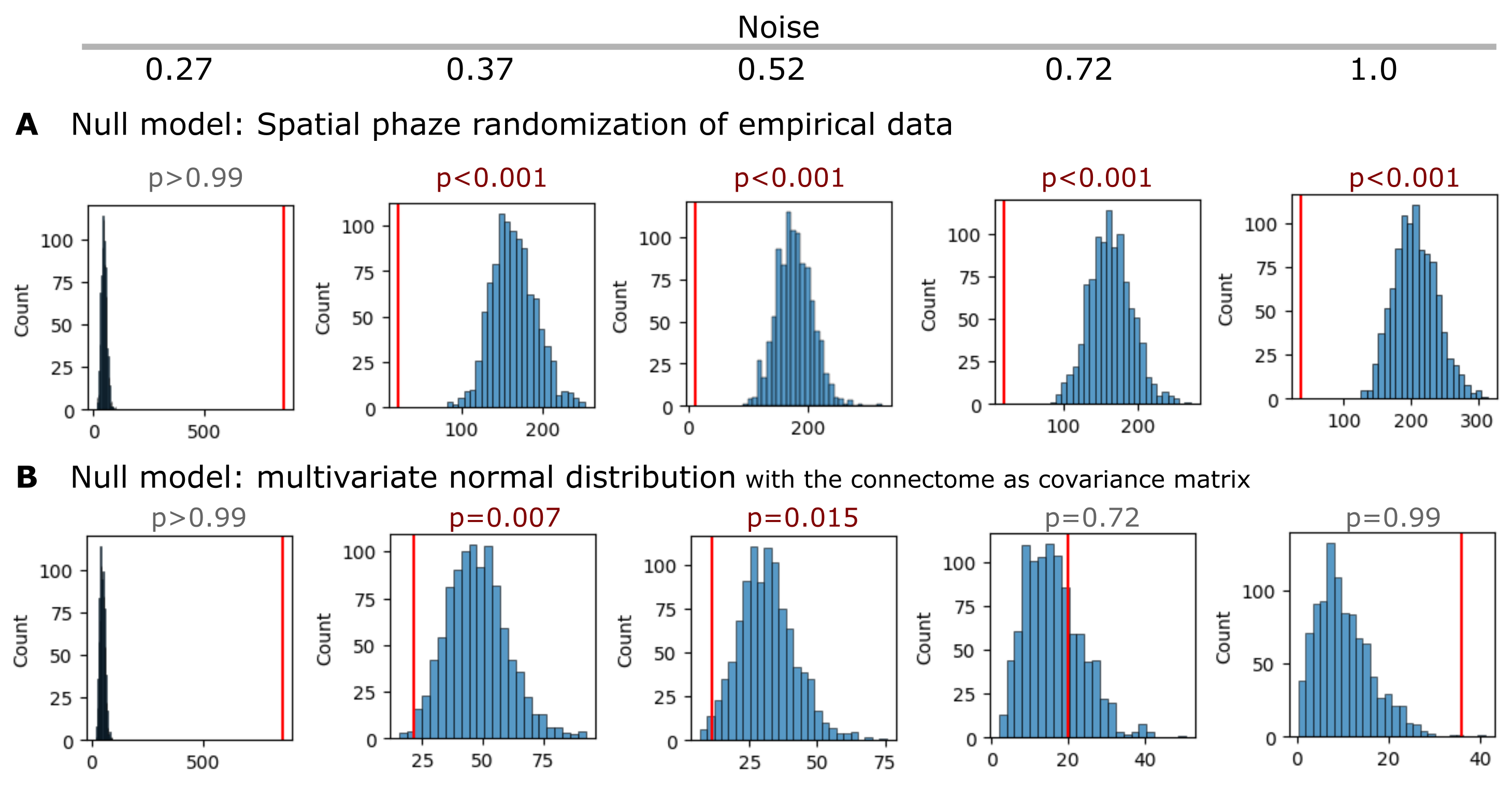

Figure 7: Statistical inference of the fcANN state occupancy prediction with different null models.

A: Results with a spatial autocorrelation-preserving null model for the empirical activity patterns. See null_models.ipynb for more details.

B: Results where simulated samples are randomly drawn from a multivariate normal distribution, with the functional connectome as the covariance matrix, and compared to the fcANN performance. See supplemental

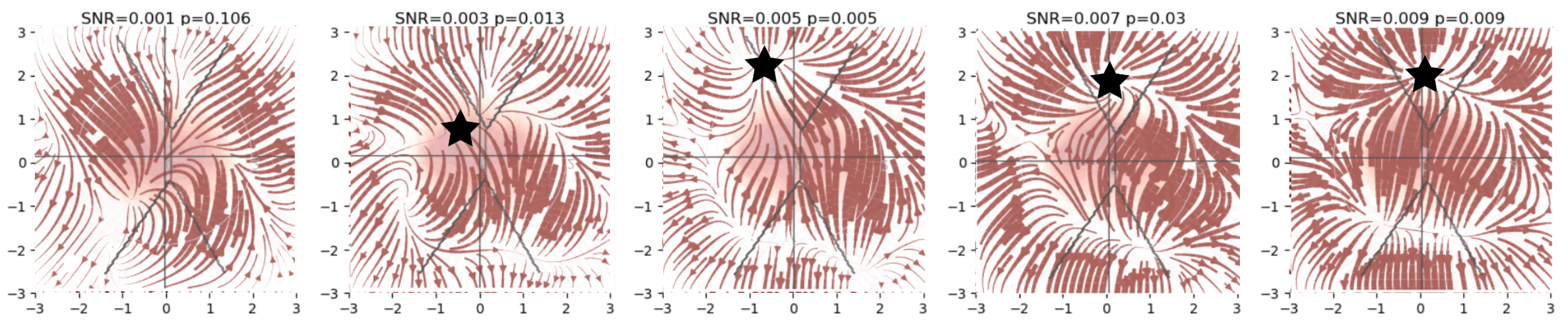

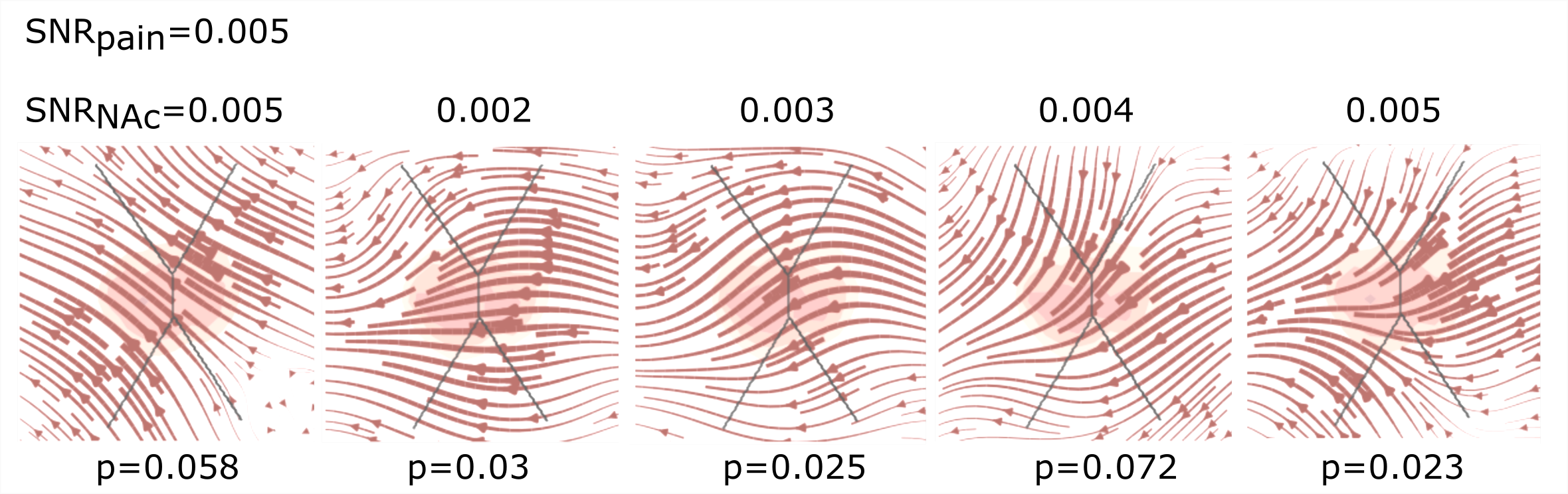

Figure 8: The fcANN can reconstruct the pain “ghost attractor”. Signal-to-noise values range from 0.003 to 0.009. The asterisk denotes the location of the simulated “ghost attractor”. P-values are based on permutation testing, by randomly changing the conditions on a per-participant basis. See main_analyses.ipynb for more details.

Figure 9: The fcANN can reconstruct the changes in brain dynamics caused by the voluntary downregulation of pain (as contrasted with upregulation). Signal-to-noise values range from 0.001 to 0.005. P-values are based on permutation testing, by randomly changing the conditions on a per-participant basis. See main_analyses.ipynb for more details.

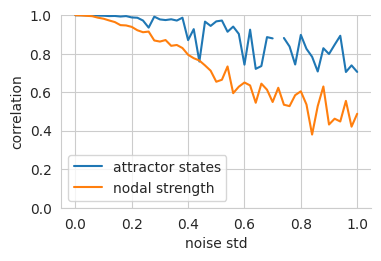

Figure 10: Robustness of the fcANN weights to noise.

We set the temperature of the fcANN so that two attractor states emerge, and iteratively add noise to the connectome. To account for the change in dynamics, we adjust the temperature (beta) of the noisy fcANN so that exactly two states emerge. We then contrast the decrease in nodal strength of the noisy connectome (the fcANN weights), used as a reference metric, with the correlation of the attractor states that emerge from the noisy connectome. See supplemental

Figure 11: All significant differences of the mean state activation analysis on the ABIDE dataset; each label denotes the region in the BASC122 atlas. See supplemental

Supplementary Tables

Table 1: MRI acquisition parameters. TR: repetition time; TE: echo time; FA: flip angle; FOV: field of view; EPI: echo‑planar imaging; SPGR: spoiled gradient recall; SENSE/GRAPPA/ASSET: parallel imaging factors. Studies 5–7 are meta-analyses or multi-center studies with varying data. Sequence parameters for these studies are available in the respective publications.

| Parameter | Study 1 | Study 2 | Study 3 | Study 4 |
|---|---|---|---|---|
| Scanner / head coil | Philips Achieva X 3T; 32‑ch | Siemens Magnetom Skyra 3T; 32‑ch | GE Discovery MR750w 3T; 20‑ch | Philips Achieva TX 3T; head coil per site |
| Anatomical sequence | T1 MPRAGE | T1 MPRAGE | T1 3D IR‑FSPGR | T1 SPGR (high‑resolution) |
| Anatomical TR / TE | 8500 ms / 3.9 ms | 2300 ms / 2.07 ms | 5.3 ms / 2.1 ms | — / — |
| Anatomical resolution / FOV | 1×1×1 mm³; 256×256×220 mm³ | 1×1×1 mm³; 256×256×192 mm³ | 1×1×1 mm³; 256×256×172 | — |
| Resting‑state EPI TR / TE / FA | 2500 ms / 35 ms / 90° | 2520 ms / 35 ms / 90° | 2500 ms / 27 ms / 81° | 2000 ms / 20 ms / — |
| Phase enc. | COL | A>>P | A>>P | — |
| FOV (voxels × slices) | 240×240×132; 40 slices | 230×230×132; 38 slices | 96×96×44; 44 slices | 64×64; 42 slices |
| Slice thickness / gap / order | 3 mm / 0.3 mm / interleaved | 3 mm / 0.48 mm / interleaved | 3 mm / 0 mm / interleaved | 3 mm / — / interleaved |
| Acceleration / fat suppression | SENSE 3× / SPIR | GRAPPA 2× / Fat sat. | ASSET 2× / Fat sat. | SENSE 1.5× / — |
| Volumes / dummies / scan time | 200 / 5 / 8 min 37 s | 290 / 5 / 12 min 11 s | 240 / 0 / 10 min | — / — / — |

Table 2: Neurosynth meta-analyses. The table lists the term used for each automated meta-analysis, the number of studies included, the total number of reported activations, and the maximal Z-statistic from the meta-analysis.

| Search term | Num. studies | Num. activations | Max. Z |
|---|---|---|---|
| pain | 516 | 23295 | 14.8 |
| motor | 2565 | 109491 | 22.5 |
| auditory | 1252 | 46557 | 25.3 |
| visual | 3110 | 115726 | 15.4 |
| face | 896 | 31842 | 26.8 |
| autobiographical | 143 | 7251 | 15.7 |
| theory of mind | 181 | 7761 | 15.1 |
| sentences | 356 | 13461 | 16.5 |

Supplementary Methods

Study 4 instructions for upregulation. “During this scan, we are going to ask you to try to imagine as hard as you can that the thermal stimulations are more painful than they are. Try to focus on how unpleasant the pain is, for instance, how strongly you would like to remove your arm from it. Pay attention to the burning, stinging and shooting sensations. You can use your mind to turn up the dial of the pain, much like turning up the volume dial on a stereo. As you feel the pain rise in intensity, imagine it rising faster and faster and going higher and higher. Picture your skin being held up against a glowing hot metal or fire. Think of how disturbing it is to be burned, and visualize your skin sizzling, melting and bubbling as a result of the intense heat.”

Study 4 instructions for downregulation. “During this scan, we are going to ask you to try to imagine as hard as you can that the thermal stimulations are less painful than they are. Focus on the part of the sensation that is pleasantly warm, like a blanket on a cold day. You can use your mind to turn down the dial of your pain sensation, much like turning down the volume dial on a stereo. As you feel the stimulation rise, let it numb your arm, so any pain you feel simply fades away. Imagine your skin is very cool, from being outside, and think of how good the stimulation feels as it warms you up.”

Supplementary Information 1

Derivation of the joint steady-state probability of free energy minimizing attractor networks. See Spisak & Friston, 2025 for details on the whole framework.

Outline

Setup: Continuous–Bernoulli kernel

We assume that the single-site conditional distribution is the Continuous–Bernoulli on with canonical parameter

and density (for )

with and (so the limit is uniform on ).

The conditional mean is:
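As a numerical sanity check of the conditional mean, the sketch below assumes a density proportional to exp(b·s) on [−1, 1], with `b` standing in for the canonical parameter (the symbol name is an assumption). Under that assumption the mean is the Langevin function coth(b) − 1/b, which the code compares against a direct numerical average. The function names are hypothetical.

```python
import numpy as np

def langevin(b):
    """Langevin function coth(b) - 1/b, with the small-b limit b/3 near zero."""
    b = np.asarray(b, dtype=float)
    small = np.abs(b) < 1e-4
    safe = np.where(small, 1.0, b)  # avoid division by zero in the unused branch
    return np.where(small, b / 3.0, 1.0 / np.tanh(safe) - 1.0 / safe)

def cb_mean_numeric(b, n=200_001):
    """Mean of the density proportional to exp(b*s) on [-1, 1], via a grid average."""
    s = np.linspace(-1.0, 1.0, n)
    w = np.exp(b * s)
    return float(np.sum(s * w) / np.sum(w))

for b in (-3.0, 0.5, 2.0):
    print(b, float(langevin(b)), cb_mean_numeric(b))
```

At b = 0 the density is uniform and the mean is zero, matching the limit stated in the setup.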

1. Master equation

Let’s consider a precise formalization of the asynchronous update procedure: each site has an independent Poisson clock of rate . When it rings, is replaced by a draw .

With the configuration equal to but with coordinate set to , the transition density is

The master equation for is

2. Probability currents

Let’s denote the site-wise flux density as:

At steady state , by definition we have:

3. Detailed balance condition

A special (and perhaps the most intuitive) way to satisfy the previous equation is detailed balance, i.e. equilibrium. In this case, every transition is balanced:

It can be shown that:

I.e., the log-density changes by the local slope at (see detailed derivation below).

Hence for :

Mixed partials commute, thus:

Therefore, equilibrium (detailed balance) is possible only when the coupling is symmetric.
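The symmetry argument can be written out as a short worked step. The notation is assumed, since the symbols are not fixed by the surrounding text: $\sigma_i$ is the state of site $i$, $b_i$ its bias, $J_{ij}$ the coupling, and the canonical parameter is taken to be linear in the other sites. Any common positive scale factor (such as an inverse temperature) is omitted because it does not affect the conclusion.

```latex
% Assumed notation (not taken from the source): canonical parameter linear in the states
\theta_i(\sigma) = b_i + \sum_{k \neq i} J_{ik}\,\sigma_k ,
\qquad
\frac{\partial \log p(\sigma)}{\partial \sigma_i} = \theta_i(\sigma).

% Differentiate once more, for i \neq j:
\frac{\partial^2 \log p(\sigma)}{\partial \sigma_j\,\partial \sigma_i} = J_{ij},
\qquad
\frac{\partial^2 \log p(\sigma)}{\partial \sigma_i\,\partial \sigma_j} = J_{ji}.

% Equality of mixed partials then forces
J_{ij} = J_{ji} \quad \text{for all } i \neq j .
```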

4. Non-equilibrium Steady State (NESS)

Detailed balance (equilibrium) is only one specific way the steady-state condition can hold:

Let’s consider the general case: an arbitrary (possibly asymmetric) coupling. can always be decomposed into:

This leads to a decomposition of the edge currents on each directed edge :

so that and .

Since , define rates accordingly:

so and , .
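The split of an arbitrary coupling into symmetric and antisymmetric parts can be illustrated numerically. The sketch assumes the usual decomposition (half the sum and half the difference of the matrix and its transpose); the function name `split_coupling` is hypothetical. It also shows one reason the antisymmetric part cannot shape a quadratic potential: its quadratic form vanishes for every state.

```python
import numpy as np

def split_coupling(J):
    """Return (symmetric part, antisymmetric part) of a square matrix J."""
    J = np.asarray(J, dtype=float)
    return (J + J.T) / 2.0, (J - J.T) / 2.0

rng = np.random.default_rng(0)
J = rng.normal(size=(5, 5))          # arbitrary, generally asymmetric coupling
J_sym, J_anti = split_coupling(J)

s = rng.uniform(-1.0, 1.0, size=5)   # an arbitrary state in [-1, 1]^5
print(np.allclose(J_sym + J_anti, J))  # the two parts add back up to J
print(abs(s @ J_anti @ s) < 1e-12)     # antisymmetric quadratic form is zero
```

Consequently, `s @ J @ s` equals `s @ J_sym @ s` for any state, so only the symmetric part enters a quadratic energy.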

We proceed in two steps: (i) the antisymmetric component is divergence-free and never changes the steady state; (ii) the remaining symmetric component yields exactly the same as the equilibrium distribution obtained by setting . Finally, as a bonus, we show that the induced circulating flow is indeed tangential to the level sets of , in accordance with the Helmholtz decomposition.

i) Antisymmetric component is divergence-free (does not change ).

Starting again from stationarity of the full current,

subtract the same identity written with in place of . Because on every edge, its contribution vanishes pairwise, giving

Thus has zero divergence and does not change .

ii) Symmetric component determines and matches the equilibrium solution with .

From Section 3, only can appear in a potential gradient (commuting mixed partials). Therefore

which integrates to the same you obtain by enforcing detailed balance with the symmetric coupling (i.e., in the absence of ). Equivalently, the symmetric current is reversible with respect to and alone reproduces the same steady state.

Note: Tangency to level sets of (no work against the potential).

Multiply the preceding identity by a test function and integrate over (“summation by parts”) to obtain

Taking yields

so the solenoidal current is orthogonal to discrete gradients and flows tangent to the isocontours of (the level sets of ).

Link to .

Under the linear Continuous–Bernoulli parametrization, cannot contribute to any scalar potential (mixed partials must commute). Therefore, varying at fixed leaves and unchanged; it only affects the circulating, divergence-free component .

Conclusion: explicit steady-state for arbitrary

By the results above, only the symmetric component can shape the potential . Therefore, for arbitrary (possibly asymmetric) , the non-equilibrium steady-state density takes the Boltzmann-like form:

with and partition function

The antisymmetric component contributes only to divergence-free (solenoidal) probability currents tangent to the level sets of and thus does not alter . This expression matches the manuscript’s stationary density, with playing the role of the effective (symmetric) interaction matrix.

Supplementary Information 2

Here we provide a concise derivation of how and change as a consequence of free energy minimization, resulting in an inference (node update) rule and a local, incremental learning rule, respectively.

For more background and a detailed derivation, see Spisak & Friston, 2025.

Let the instantaneous net input to node be

and let be the variational marginal with mean , where is the Langevin function (the mean of the Continuous–Bernoulli).

Inference (Hopfield-style)

We start by writing up the local variational free energy from the point of view of a single node :

Next we express:

with mean .

and

As it depends on only through the linear slope :

Now, we assemble the local free energy (dropping constants independent of ):

Equivalently, using and ,

Differentiate w.r.t. and use to cancel terms:

Setting the derivative to zero gives and therefore the node-wise update

which is the stochastic Hopfield/Boltzmann-style activation with the Langevin nonlinearity.

Learning (Hebb − anti-Hebb)

Make the dependence on explicit by adding and subtracting the CB log-partition (using and Bayes’ rule):

Now

and by the chain rule with we obtain

Using a stochastic (sample-based) estimate and descending gives the local update

This is a predictive coding-based learning rule that strengthens observed correlations and subtracts those already predicted by the current model.
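A single learning step of this kind can be sketched as follows. The exact form is an assumption made for illustration: the net input is taken as `b + J @ s`, the prediction as the Langevin function of that input, and the increment as the observed product minus the predicted product, with self-connections excluded. The names `langevin` and `hebb_antihebb_increment` and the learning rate `lr` are hypothetical.

```python
import numpy as np

def langevin(u):
    """Langevin function coth(u) - 1/u, with the small-u limit u/3 near zero."""
    u = np.asarray(u, dtype=float)
    small = np.abs(u) < 1e-4
    safe = np.where(small, 1.0, u)  # avoid division by zero in the unused branch
    return np.where(small, u / 3.0, 1.0 / np.tanh(safe) - 1.0 / safe)

def hebb_antihebb_increment(J, s, b, lr=0.1):
    """Weight increment: Hebbian term minus the part the model already predicts.

    Assumed form: dJ[i, j] = lr * (s[i] * s[j] - L(b[i] + (J @ s)[i]) * s[j]),
    with the diagonal zeroed (no self-coupling).
    """
    predicted = langevin(b + J @ s)
    dJ = lr * (np.outer(s, s) - np.outer(predicted, s))
    np.fill_diagonal(dJ, 0.0)
    return dJ

# With zero weights and biases nothing is predicted, so the step is purely Hebbian.
s = np.array([1.0, -1.0, 0.5])
print(hebb_antihebb_increment(np.zeros((3, 3)), s, np.zeros(3)))
```

When the current state is already fully predicted by the model, the two terms cancel and the weights stop changing.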

Supplementary Information 3

Self-orthogonalization of attractor states

To illustrate how the learning rule in free energy minimizing attractor networks gives rise to efficient, (approximately) orthogonal representations of the external states, suppose the network has already learned a pattern , whose neural representation is the attractor and associated weights . When a new pattern is presented that is correlated with , the network’s prediction for will be . Because inference with converges to and is correlated with , the prediction will capture variance in that is ‘explained’ by . The learning rule updates the weights based only on the unexplained (residual) component of the variance, the prediction error. In other words, approximates the projection of onto the subspace already spanned by . Therefore, the weight update primarily strengthens weights corresponding to the component of that is orthogonal to . Thus, the learning effectively encodes this residual, , ensuring that the new attractor components being formed tend to be orthogonal to those already established.
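The residual argument can be illustrated with plain vectors. This sketch makes the idealizing assumption that the network's prediction equals the exact projection of the new pattern onto the learned one (the text only says it approximates it); the function name `residual_after_prediction` is hypothetical.

```python
import numpy as np

def residual_after_prediction(new_pattern, learned_pattern):
    """Subtract from new_pattern its projection onto learned_pattern.

    Idealization: the prediction is the exact orthogonal projection.
    """
    scale = (new_pattern @ learned_pattern) / (learned_pattern @ learned_pattern)
    prediction = scale * learned_pattern
    return new_pattern - prediction

rng = np.random.default_rng(0)
learned = rng.normal(size=50)
new = 0.7 * learned + rng.normal(size=50)   # a pattern correlated with the learned one

residual = residual_after_prediction(new, learned)
print(abs(residual @ learned) < 1e-9)       # the residual is orthogonal to the learned pattern
```

Learning from this residual rather than from the raw pattern is what pushes the newly formed attractor component away from the existing one.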

For more details on self-orthogonalization in these networks, including an empirical demonstration, see Spisak & Friston, 2025.

Supplementary Information 4

Reconstruction of the attractor network from the activation timeseries.

We start from Eq. (1), written in matrix notation:

To complete the square, we added and subtracted within the exponent.

We recognize that . Now add and subtract

Given that , the expression simplifies to:

Note that the term is independent of ; we have absorbed it into the normalization constant.

This is exactly the exponent of a multivariate Gaussian distribution with mean and covariance matrix , meaning that the weight matrix of the attractor network can be reconstructed as the inverse covariance matrix of activation timeseries of the lower-level nodes:
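The general fact behind this reconstruction, that inverting the sample covariance of Gaussian data recovers the precision (inverse covariance) matrix, can be checked on synthetic data. The sketch below uses an arbitrary toy precision matrix; how the recovered matrix maps onto the network weights (sign and any temperature scaling) depends on the conventions of Eq. (1) and is not reproduced here.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy ground-truth precision matrix (symmetric, positive definite); illustrative values.
precision = np.array([[2.0, -0.5, 0.0],
                      [-0.5, 2.0, -0.3],
                      [0.0, -0.3, 1.5]])
covariance = np.linalg.inv(precision)

# Simulated "activation timeseries": rows are time points, columns are nodes.
samples = rng.multivariate_normal(np.zeros(3), covariance, size=100_000)

# Reconstruction: invert the empirical covariance of the timeseries.
estimated_precision = np.linalg.inv(np.cov(samples, rowvar=False))
max_err = float(np.max(np.abs(estimated_precision - precision)))
print(max_err)
```

With real data the covariance is often ill‑conditioned, so a regularized estimator may be preferable to a plain matrix inverse.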

- Spisak, T., & Friston, K. (2025). Self-orthogonalizing attractor neural networks emerging from the free energy principle. arXiv. 10.48550/ARXIV.2505.22749