Simulation 4

Resistance to catastrophic forgetting

import numpy as np

import pickle

from simulation.network import AttractorNetwork, Langevin, relax

from simulation.utils import fetch_digits_data, preprocess_digits_data, continous_inference_and_learning, run_network, evaluate_reconstruction_accuracy, vfe, report_network_evaluation, performance_metrics


from copy import deepcopy

import pandas as pd

import matplotlib.pyplot as plt

Load in simulations

From Simulation 2

results_filename = 'parameter_search_results.pkl'

# Load the parameter search results (saved in Simulation 2)
try:
    with open(results_filename, 'rb') as f:
        results = pickle.load(f)
    print(f"Results successfully loaded from {results_filename}")
except Exception as e:
    print(f"Error loading results: {e}")

Results successfully loaded from parameter_search_results.pkl

results_filename = 'performance_results.pkl'

# Load the performance results
try:
    with open(results_filename, 'rb') as f:
        performance_results = pickle.load(f)
    print(f"Successfully loaded results from {results_filename}")
    # A sanity check could go here, e.g. comparing len(results) and len(performance_results)
except Exception as e:
    print(f"Error loading performance results: {e}")

Successfully loaded results from performance_results.pkl
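The two loading cells above print an error and carry on if a file is missing, which would only surface later as a `NameError`. As a hedged alternative, here is a minimal sketch of a loader that fails immediately instead. The helper name `load_pickle` and the demo file are hypothetical and not part of the `simulation` package; the payload is a stand-in, not the real results.

```python
import pickle
import tempfile
from pathlib import Path


def load_pickle(path):
    """Load a pickle file, raising immediately if the file is missing."""
    path = Path(path)
    if not path.is_file():
        raise FileNotFoundError(f"Expected results file not found: {path}")
    with path.open("rb") as f:
        return pickle.load(f)


# Round-trip demo with a stand-in payload (not the real simulation results).
demo_payload = [{"params": {"evidence_level": 11, "inverse_temperature": 0.1668}}]
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = Path(tmp_dir) / "demo_results.pkl"
    with demo_path.open("wb") as f:
        pickle.dump(demo_payload, f)
    loaded = load_pickle(demo_path)

print(loaded == demo_payload)
```

In the notebook this could be used as `results = load_pickle('parameter_search_results.pkl')`. Note that `pickle.load` can execute arbitrary code, so it should only be pointed at files you produced yourself.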

Pick a simulation case from the optimal regime

evidence_level = 11

inverse_temperature = 0.1668

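# Find the entry in performance_results that matches the specified evidence_level and inverse_temperature
matching_entry = None
matching_index = None
for i, entry in enumerate(performance_results):
    # Use np.isclose for floating point comparison
    if np.isclose(entry['params']['evidence_level'], evidence_level, rtol=0.01) and \
       np.isclose(entry['params']['inverse_temperature'], inverse_temperature, rtol=0.01):
        matching_entry = entry
        matching_index = i
        break

# Stop here if nothing matched, instead of silently using the last loop index
if matching_entry is None:
    raise ValueError("No entry matches the requested evidence_level and inverse_temperature")

print(matching_entry)

# Assumes results and performance_results are index-aligned (same parameter order)
training_output_modified = deepcopy(results[matching_index]['training_output'])
nw = deepcopy(matching_entry['nw'])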
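The tolerance-based lookup above can be factored into a small reusable function. The following is a sketch only: `find_matching_index` and `demo_results` are hypothetical names with made-up parameter values, and the only structural assumption is that each entry holds a `'params'` dictionary, as in the cell above.

```python
import numpy as np


def find_matching_index(entries, rtol=0.01, **target_params):
    """Return the index of the first entry whose params match every target
    within the relative tolerance rtol, or None if no entry matches."""
    for idx, entry in enumerate(entries):
        params = entry["params"]
        if all(np.isclose(params[name], value, rtol=rtol)
               for name, value in target_params.items()):
            return idx
    return None


# Stand-in entries with invented parameter values, for illustration only.
demo_results = [
    {"params": {"evidence_level": 5, "inverse_temperature": 0.0599}},
    {"params": {"evidence_level": 11, "inverse_temperature": 0.16681}},
    {"params": {"evidence_level": 11, "inverse_temperature": 0.4642}},
]

idx = find_matching_index(demo_results, evidence_level=11, inverse_temperature=0.1668)
missing = find_matching_index(demo_results, evidence_level=3, inverse_temperature=1.0)
print(idx, missing)
```

Returning `None` for a failed search makes the no-match case explicit, so the caller can decide whether to raise or fall back to another parameter setting.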

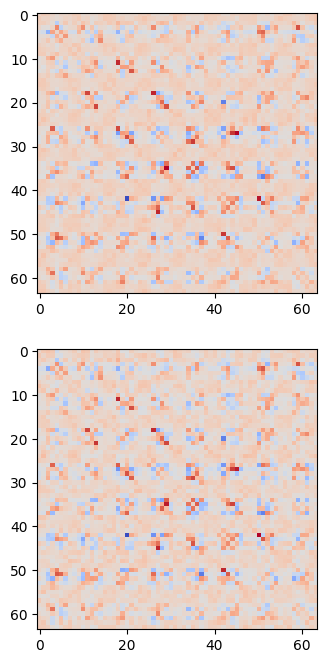

fig, axs = plt.subplots(2, 1, figsize=(10, 8))
axs[0].imshow(nw.get_J(), cmap='coolwarm')
J_before = nw.get_J()  # couplings before the additional updates

num_steps = 50000  # just like during training
learning_rate = 0.001  # just like during training
inverse_temperature = 1  # increased

for i in range(num_steps):  # keep on running with zero bias
    nw.update(inverse_temperature=inverse_temperature, learning_rate=learning_rate, least_action=False)

axs[1].imshow(nw.get_J(), cmap='coolwarm')
J_after = nw.get_J()  # couplings after the additional updates

upper_tri_indices = np.triu_indices_from(J_before, k=1)

J_before_upper = J_before[upper_tri_indices]

J_after_upper = J_after[upper_tri_indices]

np.corrcoef(J_before_upper, J_after_upper)[0,1]

np.float64(0.9728280402261207)
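The comparison above correlates only the entries strictly above the diagonal (`k=1`). Assuming the coupling matrix is symmetric, this counts each pairwise coupling once and leaves out the diagonal. Below is a minimal sketch of the same measure on synthetic matrices; `coupling_similarity` and the demo matrices are hypothetical and unrelated to the trained network.

```python
import numpy as np


def coupling_similarity(J_a, J_b):
    """Pearson correlation between the strictly upper-triangular entries
    of two square matrices of the same shape."""
    J_a = np.asarray(J_a, dtype=float)
    J_b = np.asarray(J_b, dtype=float)
    if J_a.ndim != 2 or J_a.shape[0] != J_a.shape[1] or J_a.shape != J_b.shape:
        raise ValueError("expected two square matrices of the same shape")
    upper = np.triu_indices_from(J_a, k=1)  # k=1 skips the diagonal
    return float(np.corrcoef(J_a[upper], J_b[upper])[0, 1])


# Synthetic symmetric matrix with zero diagonal, plus a small symmetric perturbation.
rng = np.random.default_rng(0)
A = rng.normal(size=(8, 8))
J_demo_before = (A + A.T) / 2
np.fill_diagonal(J_demo_before, 0.0)
noise = rng.normal(scale=0.1, size=(8, 8))
J_demo_after = J_demo_before + (noise + noise.T) / 2

print(coupling_similarity(J_demo_before, J_demo_before))
print(coupling_similarity(J_demo_before, J_demo_after))
```

Because Pearson correlation is invariant to a uniform rescaling of the weights, a value near 1 indicates that the pattern of couplings is preserved, not that their magnitudes are unchanged.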